Integration Testing on AWS with CDK

Use Stacks, Constructs and AWS SSM Parameters to easily test your serverless infrastructure workloads

TL;DR: Implement integration testing on AWS by copying my CDK setup and ParameterClient implementation, available in the demo repository on GitHub: https://github.com/Martijn-Sturm/cdk-integ-test

In this blog post, integration tests are tests that verify the correct interaction between the components of a larger system. "Components" here means something different from programming constructs such as classes and functions, which can be tested with unit tests. In the AWS cloud, components are services such as AWS Lambda functions or Amazon SQS queues that together form functional sub-systems. We focus on AWS CDK with TypeScript for infrastructure as code, but the concepts apply to other programming languages as well.

Why Integration Tests?

Integration tests validate that workloads work correctly

In AWS's serverless stack, you often combine multiple services to build part of a workflow. These interactions cannot be fully tested with unit tests, because they require deployed infrastructure. Mocking this infrastructure on a local workstation does not faithfully replicate the AWS cloud environment, which sometimes makes that approach infeasible.

Amazon SQS is a good example of a service whose behavior depends largely on its integration with other AWS services, such as IAM, and with its producers and consumers, so it is best tested within the AWS cloud. Similarly, data lake frameworks such as Apache Hudi, which operate on top of S3 and Glue, span the boundaries of single services. This complexity makes integration tests necessary to validate them.

Integration tests guard against regressions in working implementations

When you build new functionality consisting of infrastructure as code in CDK and Lambda source code, integration tests let you validate that it works. If you make sure these tests pass before merging your changes into the main branch, you create a checkpoint. When you later change the source code or the infrastructure definition, the same integration tests verify that you have not broken the previously working workload.

A big advantage of using serverless services for these integration tests is that they typically incur costs only when they are invoked and process data. You can therefore keep integration-test infrastructure deployed in your development environment for as long as you want and pay mainly when the tests run.

Integration tests are part of your CI/CD

Just like unit tests, all integration tests must pass before a feature branch is merged into the main branch. Unlike unit tests, however, integration tests need deployed infrastructure to run. This requires defining the test infrastructure in a construct that encapsulates all resources the tests need.

In a typical engineering team, multiple developers work on features at the same time, so integration tests must be able to run per feature branch. We achieve this by deploying a separate development CDK stack for each feature branch to the development environment, i.e., a dedicated AWS account.

Structuring Your CDK for Integration Tests

Our CI/CD setup and infrastructure are modeled using the AWS Cloud Development Kit (CDK). Here's how we structure it:

Stacks and collections

Environments as CDK Stacks: Each environment (Production, Acceptance, Development) is represented by a single CDK stack. This keeps deployment simple, requiring only one stack per environment. Stack IDs are hardcoded (e.g., 'prod', 'acc', 'dev'), except for the development stack: to accommodate multiple feature branches, we make its ID unique by appending the branch name.
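A minimal sketch of that naming rule follows. It assumes the development stack ID is the branch name followed by `Dev` and the acceptance ID is `Acc`, matching the parameter keys used later in this post (`Prod` is assumed by analogy). The helper name `stackIdFor` and the sanitizing step are hypothetical, not taken from the demo repository: CloudFormation stack names may contain only letters, digits and hyphens and must start with a letter, so a branch such as `feature/add-queue` has to be cleaned up first.

```typescript
type Stage = 'prod' | 'acc' | 'dev';

// Hypothetical helper: map an environment (and, for dev, a branch) to a stack ID.
function stackIdFor(stage: Stage, branch?: string): string {
  // Fixed IDs for the shared environments
  if (stage === 'prod') return 'Prod';
  if (stage === 'acc') return 'Acc';

  // dev: one stack per feature branch, so the branch becomes part of the ID
  if (!branch) {
    throw new Error('A branch name is required for a dev stack');
  }
  // CloudFormation stack names allow only [A-Za-z0-9-]; replace anything else
  const sanitized = branch.replace(/[^A-Za-z0-9-]/g, '-');
  const id = `${sanitized}Dev`;
  // Stack names must start with a letter and are limited to 128 characters
  if (!/^[A-Za-z]/.test(id) || id.length > 128) {
    throw new Error(`Cannot derive a valid stack ID from branch "${branch}"`);
  }
  return id;
}

// In the CDK app entry point, the result would be passed as the stack's id
console.log(stackIdFor('dev', 'feature/add-queue')); // feature-add-queueDev
```

Sanitizing inside one helper means the CI pipeline and the integration tests can both derive the same stack ID from the raw branch name.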

Collections for Resource Grouping: Collections are the top abstraction layer; they group components for deployment in the environment-specific stacks. In our projects, we use two main collections:

Platform Collection: Deployed in the 'prod' environment to handle user requests and in 'acc' for testing platform deployment.

IntegTests Collection: Used in the 'dev' environment for integration testing before merging feature branches, and optionally in 'acc' for ad-hoc integration tests.

CDK constructs: collections and components. All boxes in the diagram above represent CDK constructs. Note the containment relationships between constructs: the platform collection construct contains three different component constructs.
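The mapping from environments to collections described above can be written down as a small lookup. This is a hypothetical sketch: in a real CDK app each name would correspond to a collection construct instantiated inside the environment's stack, and the `adHocIntegTestsInAcc` switch is an assumed stand-in for however the optional acceptance deployment is toggled.

```typescript
type Stage = 'prod' | 'acc' | 'dev';
type CollectionName = 'Platform' | 'IntegTests';

// Hypothetical deployment matrix: the collections each environment stack contains.
// In the CDK app, each name would map to a collection construct created in that stack.
function collectionsFor(stage: Stage, adHocIntegTestsInAcc = false): CollectionName[] {
  if (stage === 'prod') {
    // Serves user requests
    return ['Platform'];
  }
  if (stage === 'acc') {
    // Tests the platform deployment; integration tests only when requested
    return adHocIntegTestsInAcc ? ['Platform', 'IntegTests'] : ['Platform'];
  }
  // dev: one stack per feature branch with the integration-test infrastructure
  return ['IntegTests'];
}

console.log(collectionsFor('acc', true)); // [ 'Platform', 'IntegTests' ]
```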

Components

Within our platform, 'components' are CDK constructs that provide specific functionality. In an event-driven architecture, for instance, a 'transformation' component might include a Lambda function, an SQS queue and CloudWatch alarms. Components expose public methods through which they integrate with other parts of the system, both upstream and downstream.

In the context of integration testing, we often need to modify these integrations. For example, 'ComponentA' normally sends messages to a downstream component's SQS queue. When testing ComponentA, we replace that queue with a mock queue, which lets us make assertions on the messages sent. This enables isolated testing of individual components or small groups of components.

Running Integration Tests

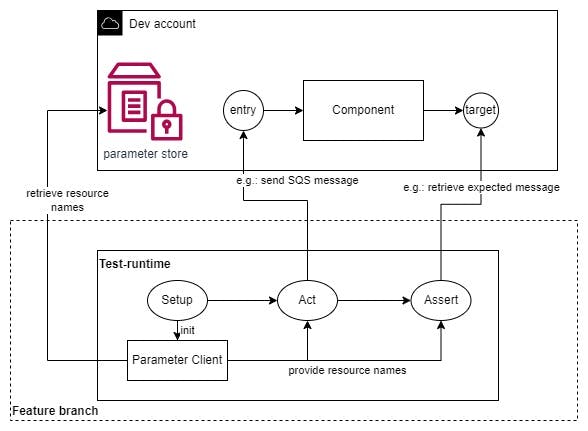

In our integration test setup, we rely on the AWS SSM Parameter Store for the configuration data needed to interact with AWS resources, such as queue URLs and table names. The ParameterClient class streamlines this: it is instantiated with specific stage and branch arguments and fetches the relevant parameters.

ParameterClient can be adapted to various deployment scenarios and CDK implementations. The CDK snippet below, for instance, creates a parameter in the SSM Parameter Store. For a complete example, refer to https://github.com/Martijn-Sturm/cdk-integ-test/blob/main/src/lib/components/demoTable.ts

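```typescript
import { Construct } from 'constructs';
import * as ssm from 'aws-cdk-lib/aws-ssm';
// generateParameterName is shown below

export class DemoTable extends Construct {
  constructor(scope: Construct, id: string) {
    super(scope, id);
    // ... Instantiation of this.table and other infrastructure

    // Publish the table name so integration tests can look it up in SSM
    new ssm.StringParameter(this, 'tableName', {
      parameterName: generateParameterName(this, 'tableName'),
      stringValue: this.table.tableName,
    });
  }
}
```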

The generateParameterName implementation:

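```typescript
import { Construct } from 'constructs';
// ParameterRegistry (an enum of allowed parameter names) and ensureLeadingSlash
// are defined elsewhere in the demo repository.

// Derive the SSM parameter name from the construct's position in the construct tree:
// '/<stack ID>/<path within the stack>/<name>'
export function generateParameterName(scope: Construct, name: string) {
  const paramName = `${scope.node.path}/${name}`;

  // Strip the first path segment (the stack ID) before checking the registry,
  // so the same registry entry is valid in every environment stack
  const withoutStackName = paramName.split('/').slice(1).join('/');

  if (
    !Object.values(ParameterRegistry).includes(
      withoutStackName as ParameterRegistry
    )
  ) {
    // Fail during synthesis/deployment when a parameter is not registered
    throw new Error(`Parameter name ${paramName} is not in the registry`);
  }

  return ensureLeadingSlash(paramName);
}
```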
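To see what generateParameterName produces without synthesizing a CDK app, the same steps can be run on a plain construct-path string. This is a hypothetical sketch: the two registry values, the `parameterNameFromPath` name and the `ensureLeadingSlash` body are assumptions (the demo repository defines its own), and a `const` object stands in for the `ParameterRegistry` enum. The leading slash matters because SSM requires hierarchical parameter names, i.e. names containing `/`, to start with `/`.

```typescript
// Hypothetical registry entries: parameter paths relative to the stack
const ParameterRegistry = {
  integTests_Demo_QueueUrl: 'IntegTests/Demo/QueueUrl',
  integTests_Demo_tableName: 'IntegTests/Demo/tableName',
} as const;

// Hypothetical stand-in for ensureLeadingSlash
function ensureLeadingSlash(name: string): string {
  return name.startsWith('/') ? name : `/${name}`;
}

// Same steps as generateParameterName, with scope.node.path passed in as a string
function parameterNameFromPath(constructPath: string, name: string): string {
  const paramName = `${constructPath}/${name}`;
  // Drop the stack ID so one registry entry covers every environment stack
  const withoutStackName = paramName.split('/').slice(1).join('/');
  const registered: string[] = Object.values(ParameterRegistry);
  if (!registered.includes(withoutStackName)) {
    throw new Error(`Parameter name ${paramName} is not in the registry`);
  }
  return ensureLeadingSlash(paramName);
}

console.log(parameterNameFromPath('feature-add-queueDev/IntegTests/Demo', 'tableName'));
// prints /feature-add-queueDev/IntegTests/Demo/tableName
```

Because the stack ID is stripped before the registry check, a typo in a parameter name fails the deployment of every environment, not just one.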

The following snippet shows how an integration test retrieves these parameters:

```typescript
import { SSMClient } from '@aws-sdk/client-ssm';
// getBranch, getStageByBranch, ParameterClient and ParameterRegistry
// come from the demo repository.

const branch = getBranch();
const stage = getStageByBranch(branch);
const ssmClient = new SSMClient({ region: 'eu-west-1' });

// The client constructs parameter keys:
// For dev: '<feature branch>Dev/IntegTests/<component name>/<parameter name>'
// For acc: 'Acc/IntegTests/<component name>/<parameter name>'
const paramClient = new ParameterClient(stage, ssmClient, branch);

// ParameterRegistry is an enum, which allows you to do validation
// during deployment. It also eases retrieval of the correct resource names.
// Run this in an async context, e.g. Jest's beforeAll.
const params = await paramClient.retrieveParameters({
  actTargetQueueURL: ParameterRegistry.integTests_Demo_QueueUrl,
  assertTableName: ParameterRegistry.integTests_Demo_tableName,
});
```
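The actual ParameterClient lives in the demo repository. To illustrate the idea only, here is a minimal sketch under stated assumptions: the class name `ParameterClientSketch` is hypothetical, it takes a lookup function instead of an `SSMClient` so it runs without AWS credentials, and it assumes registry values are paths relative to the stack (such as `IntegTests/Demo/tableName`) and that `branch` is already in the form used in the stack ID. With the AWS SDK for JavaScript v3, the lookup could wrap `ssmClient.send(new GetParameterCommand({ Name: name }))` and return `Parameter.Value`.

```typescript
type Stage = 'dev' | 'acc';

// Looks up a single SSM parameter value by its full name (injected for testability)
type ParameterLookup = (name: string) => Promise<string>;

class ParameterClientSketch {
  private readonly stage: Stage;
  private readonly lookup: ParameterLookup;
  private readonly branch?: string;

  constructor(stage: Stage, lookup: ParameterLookup, branch?: string) {
    if (stage === 'dev' && !branch) {
      throw new Error('A branch is required to resolve dev parameters');
    }
    this.stage = stage;
    this.lookup = lookup;
    this.branch = branch;
  }

  // '/<branch>Dev/<registry value>' for dev, '/Acc/<registry value>' for acc
  parameterName(registryValue: string): string {
    const stackId = this.stage === 'dev' ? `${this.branch}Dev` : 'Acc';
    return `/${stackId}/${registryValue}`;
  }

  // Resolve every alias in the request to the value of its parameter
  async retrieveParameters<K extends string>(
    request: Record<K, string>,
  ): Promise<Record<K, string>> {
    const result = {} as Record<K, string>;
    for (const alias of Object.keys(request) as K[]) {
      result[alias] = await this.lookup(this.parameterName(request[alias]));
    }
    return result;
  }
}
```

Keeping the aliases (`actTargetQueueURL`, `assertTableName`) as the keys of the result means each test reads its resources by role in the arrange/act/assert flow rather than by raw SSM path.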

For a visual overview of the integration testing process, refer to the image at the start of this section.

Shortcomings

Using unit-testing frameworks for integration tests

We used Jest to write our integration tests, which works but isn't ideal. Jest is designed primarily for unit testing: it assumes test cases are independent, runs test files in parallel, and expects tests to complete within seconds. Integration tests, however, cover a broader range of operations, including network traffic, and take longer. Their outcomes are more complex and non-deterministic and often require human interpretation when a test fails.

Given these challenges, integration tests in Jest need to be broken down into smaller, sequential steps. Jest does not support this natively, so it requires workarounds such as flag variables. Recognizing these limitations is crucial for developing effective integration testing strategies in similar environments.
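The flag-variable workaround can be illustrated without Jest itself. In this hypothetical sketch (`runSteps` is not part of Jest or the demo repository), steps run strictly in order, and once one fails a flag makes the remaining steps report as skipped instead of producing a cascade of misleading follow-up failures. In a Jest file, the same idea is a module-level `let` flag that each sequential `test` checks before doing its work.

```typescript
type Step = { name: string; run: () => Promise<void> };
type StepResult = { name: string; status: 'passed' | 'failed' | 'skipped'; error?: unknown };

// Run integration-test steps in order; after the first failure, skip the rest.
async function runSteps(steps: Step[]): Promise<StepResult[]> {
  let previousStepFailed = false; // the "flag variable"
  const results: StepResult[] = [];
  for (const step of steps) {
    if (previousStepFailed) {
      // Later steps depend on earlier ones, so running them would be meaningless
      results.push({ name: step.name, status: 'skipped' });
      continue;
    }
    try {
      await step.run();
      results.push({ name: step.name, status: 'passed' });
    } catch (error) {
      previousStepFailed = true;
      results.push({ name: step.name, status: 'failed', error });
    }
  }
  return results;
}
```

A typical sequence would be "arrange" (look up parameters), "act" (send a message to the queue under test) and "assert" (check the table); if "act" fails, the report shows one failure and one skipped step rather than two failures.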

Integration tests are flaky

Another significant challenge in AWS integration testing is flakiness: tests can be non-deterministic. In a distributed cloud environment, network latency, varying service response times and intermittent connectivity issues can make a test pass in one run and fail in the next under seemingly identical conditions. This inconsistency complicates debugging and undermines the reliability of the tests. Countering it requires robust error handling and retry mechanisms, as well as careful attention to timing issues, such as asynchronous processing that has not yet finished when an assertion runs. Only then do integration tests reliably reflect the system's behavior, which is particularly challenging given the inherent complexity of a cloud environment like AWS.

An example of a retry mechanism within a test can be found in test/repeat.ts. Retrying makes the test more robust against delays introduced by the network.
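The general retry pattern can be sketched as follows. This is a hypothetical helper written for illustration, not the contents of test/repeat.ts: it re-runs an async check until the check stops throwing or the attempts run out, waiting a fixed delay between attempts, and rethrows the last error so the test report shows the real cause.

```typescript
// Hypothetical retry helper: re-run an async check until it passes or attempts run out.
async function retryUntilPass<T>(
  check: () => Promise<T>,
  { attempts = 10, delayMs = 2000 }: { attempts?: number; delayMs?: number } = {},
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      // Success: return the check's result immediately
      return await check();
    } catch (error) {
      lastError = error;
      // Wait before the next attempt, but not after the last one
      if (attempt < attempts) {
        await new Promise<void>((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  // All attempts failed: surface the most recent error
  throw lastError;
}
```

A typical use is polling on the assert side, for example reading the table named by `assertTableName` until the record written by the component under test appears; `attempts × delayMs` roughly bounds the total wait.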

Conclusion

In summary, this exploration of integration testing on AWS with CDK highlights its essential role in ensuring the functionality and reliability of serverless applications. Challenges remain, such as the limitations of Jest for integration testing, test flakiness and the complexity of configuring cloud environments, but the practices described here give developers practical strategies to deal with them. As cloud computing evolves, so will the approaches to effective integration testing; staying informed and adaptable is key for teams that want to maintain high quality standards in their AWS-based solutions.